regularization machine learning mastery

Weight regularization is a technique for imposing constraints, such as L1 or L2 penalties, on a model's weights.

TensorFlow 2 Tutorial: Get Started in Deep Learning With tf.keras

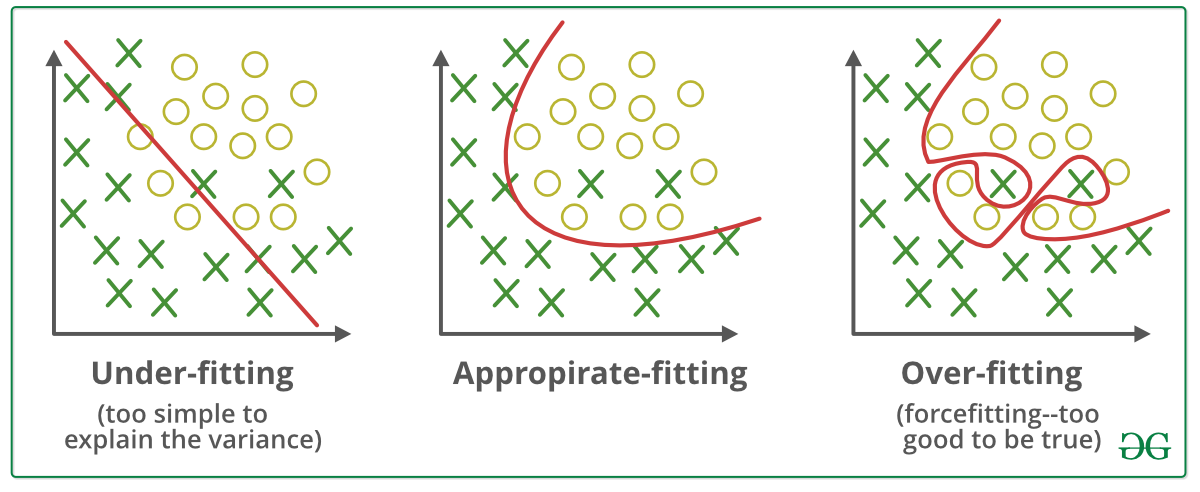

Regularization is a technique that reduces error by fitting the function appropriately on the given training set while avoiding overfitting.

In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero. Regularization is a concept much older than deep learning and an integral part of classical statistics. The key difference between Lasso and Ridge regression is the penalty term.

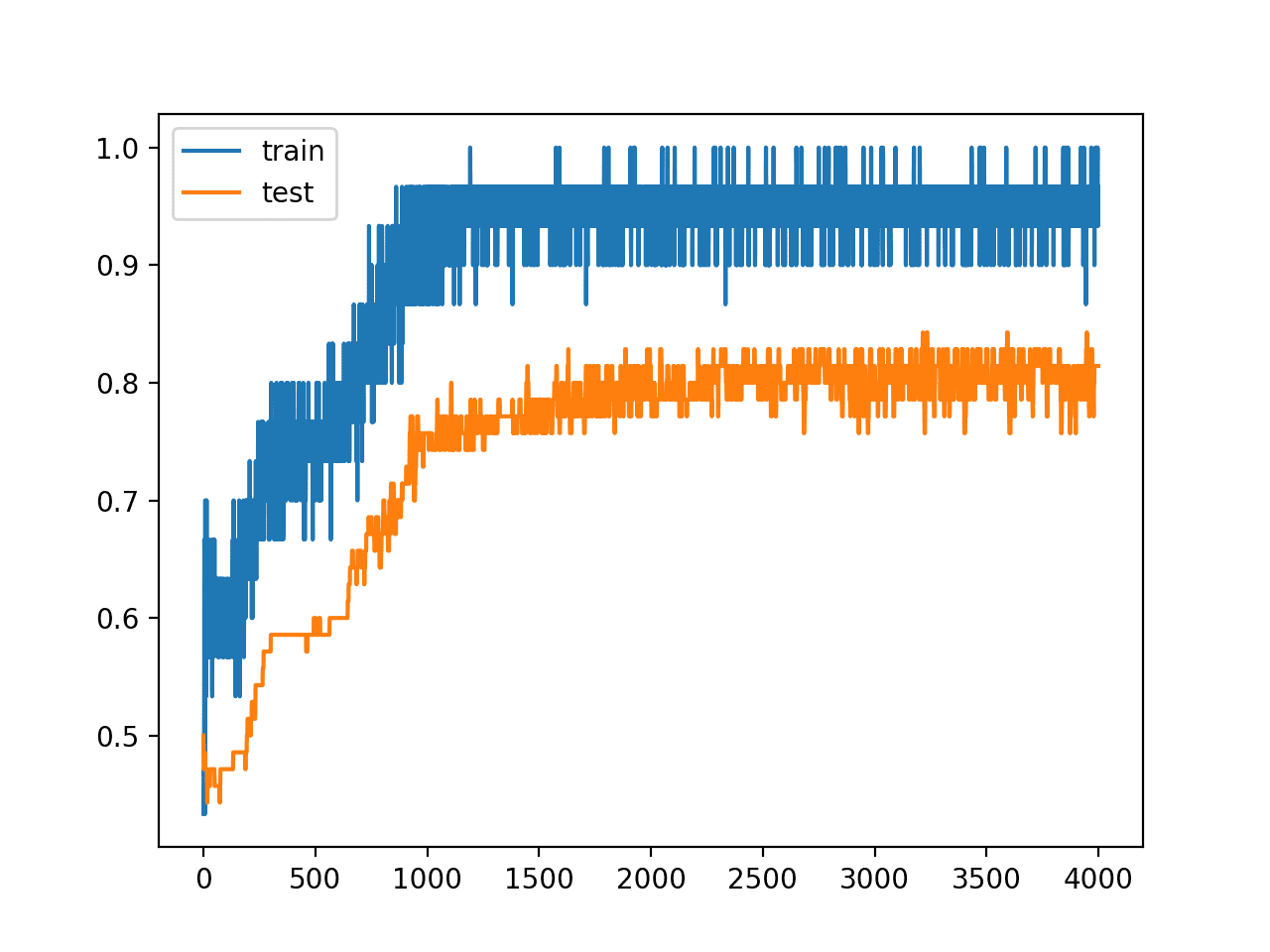

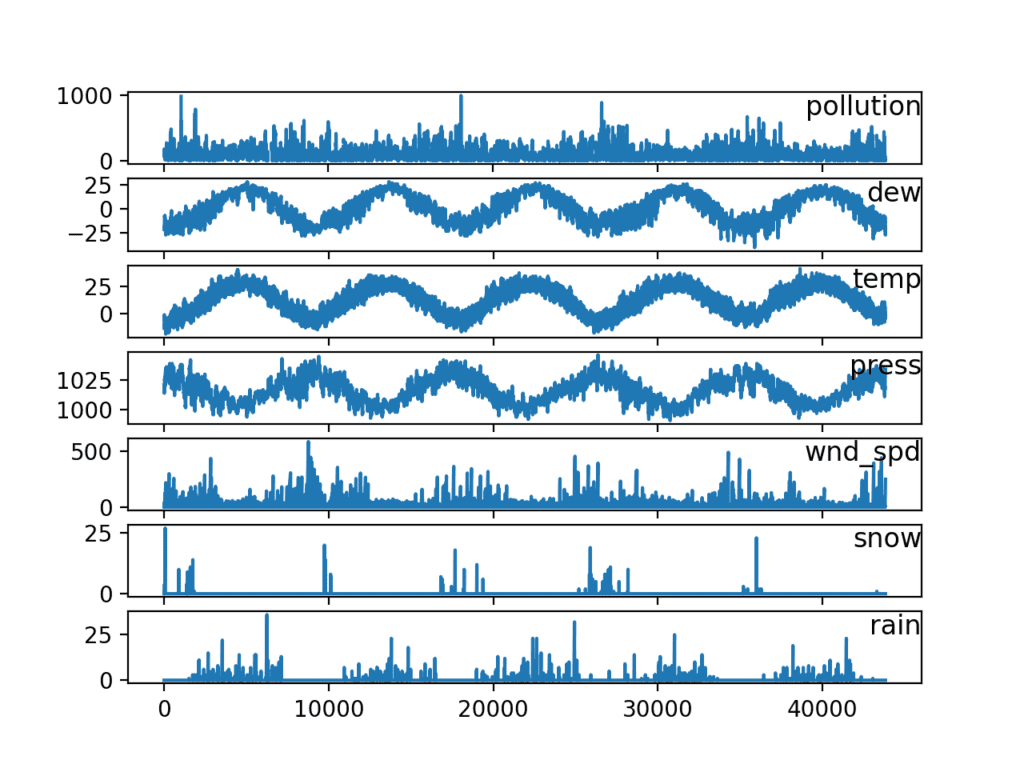

Long Short-Term Memory (LSTM) models are recurrent neural networks capable of learning sequences of observations.

A dropout layer can be created with a 50% chance of setting inputs to zero. I have covered the entire concept in two parts. The ability to learn sequences may make LSTMs well suited to time series forecasting.
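To make the 50% dropout layer concrete, here is a minimal pure-Python sketch of what such a layer does during training. The helper name `dropout` and its parameters are hypothetical, and the rescaling of surviving inputs ("inverted dropout") is an assumption about the implementation, not something stated in the text above.

```python
import random


def dropout(inputs, rate=0.5, training=True, rng=None):
    """Illustrative inverted dropout over a list of floats (hypothetical helper)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    # At inference time (or with rate 0) the inputs pass through unchanged.
    if not training or rate == 0.0:
        return list(inputs)
    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    # Each input is zeroed with probability `rate`; survivors are scaled by
    # 1 / keep_prob so the expected value of each output matches its input.
    return [x / keep_prob if rng.random() >= rate else 0.0 for x in inputs]


activations = [1.0] * 10
dropped = dropout(activations, rate=0.5, rng=random.Random(0))
print(dropped)
```

With a rate of 0.5, every output is either 0.0 (dropped) or 2.0 (kept and rescaled); which positions are dropped depends on the random seed.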

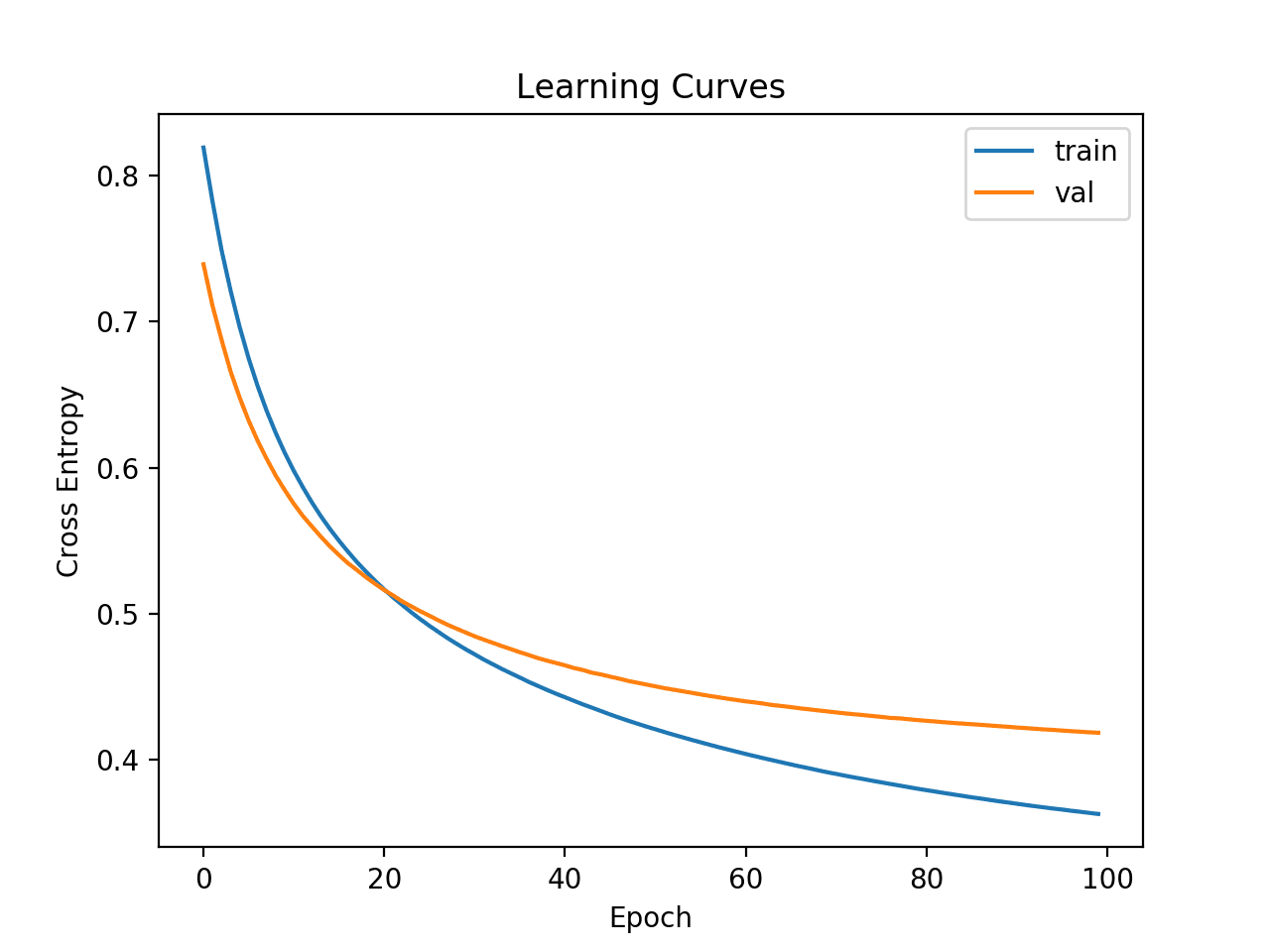

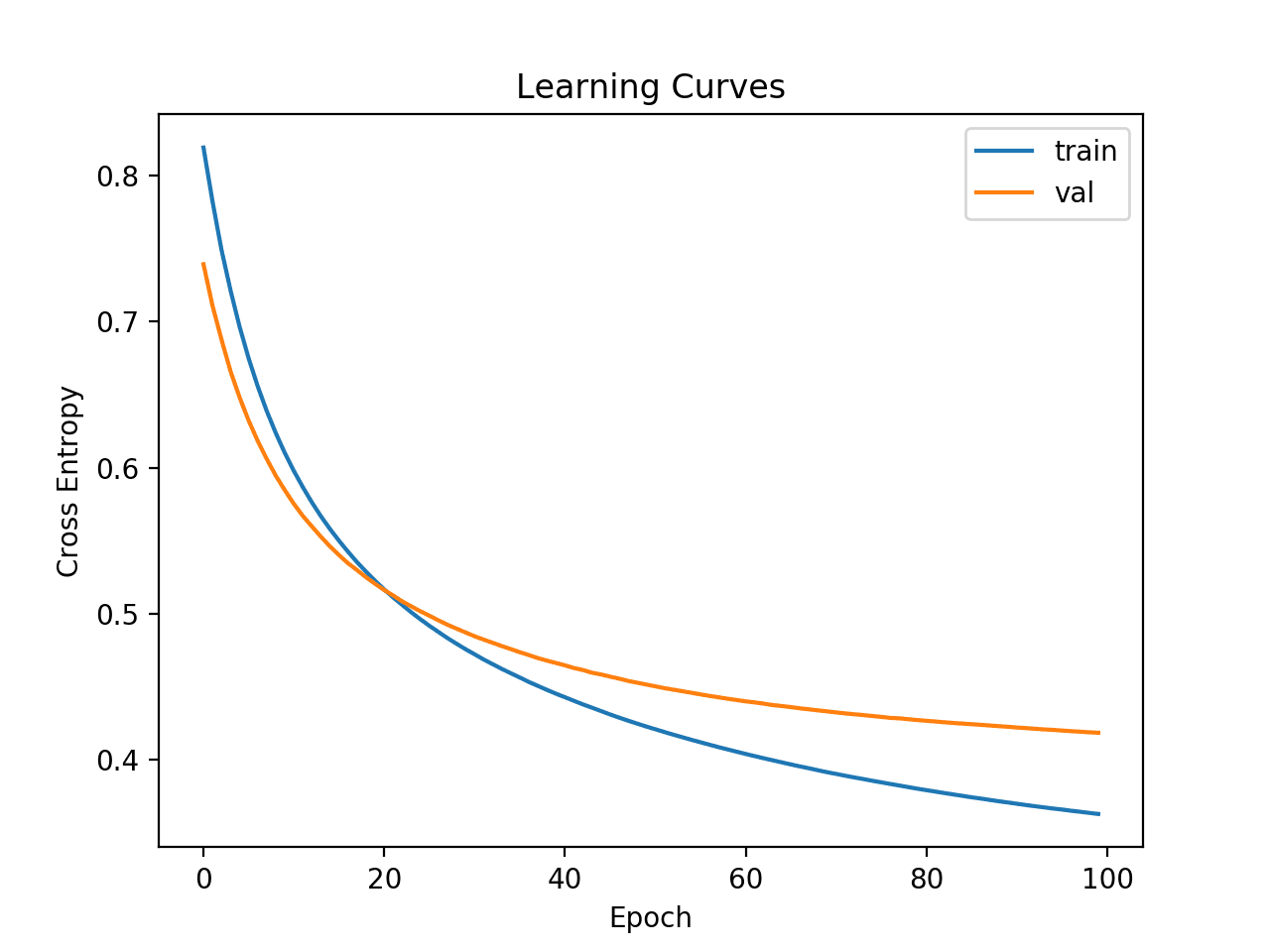

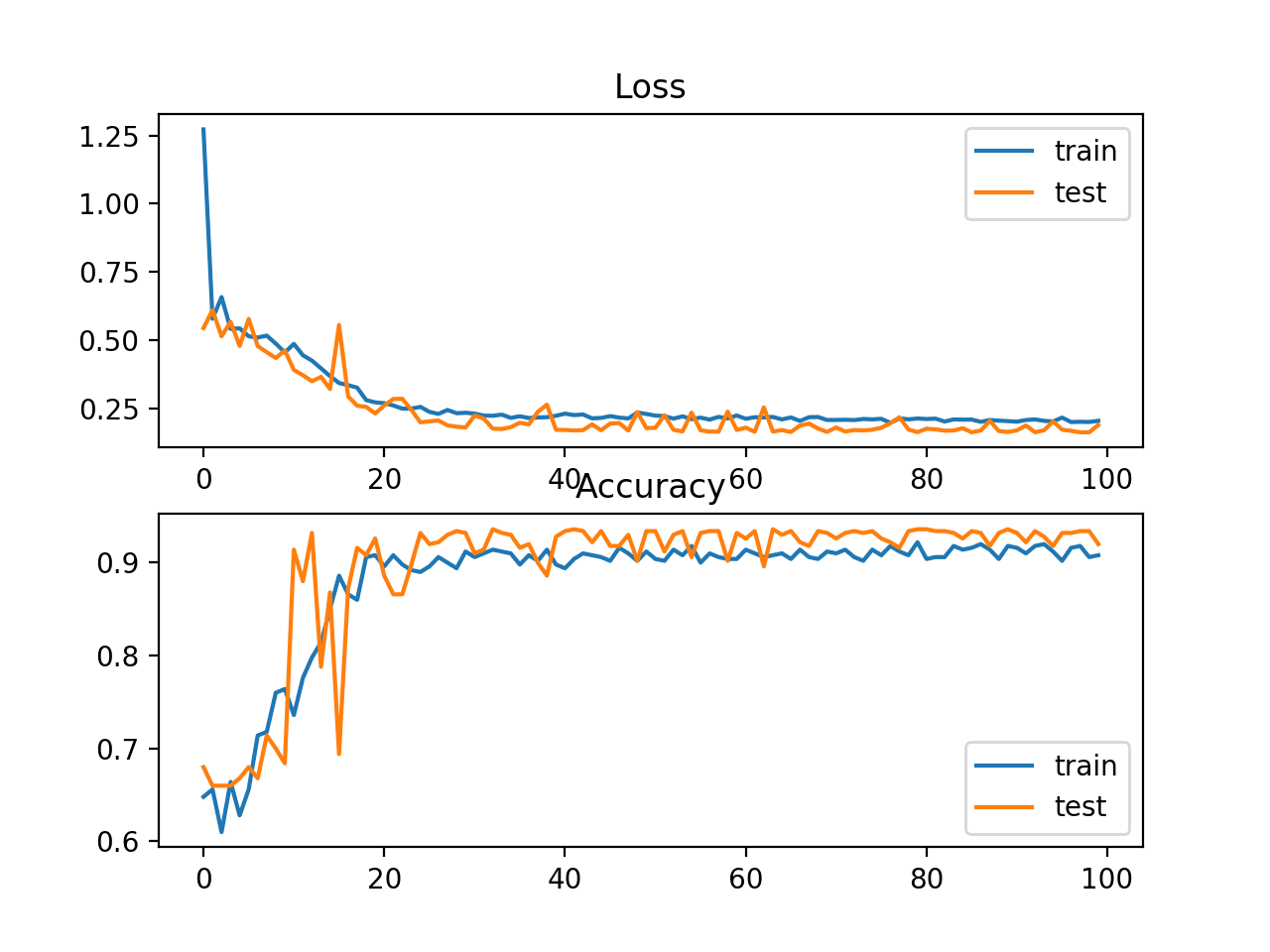

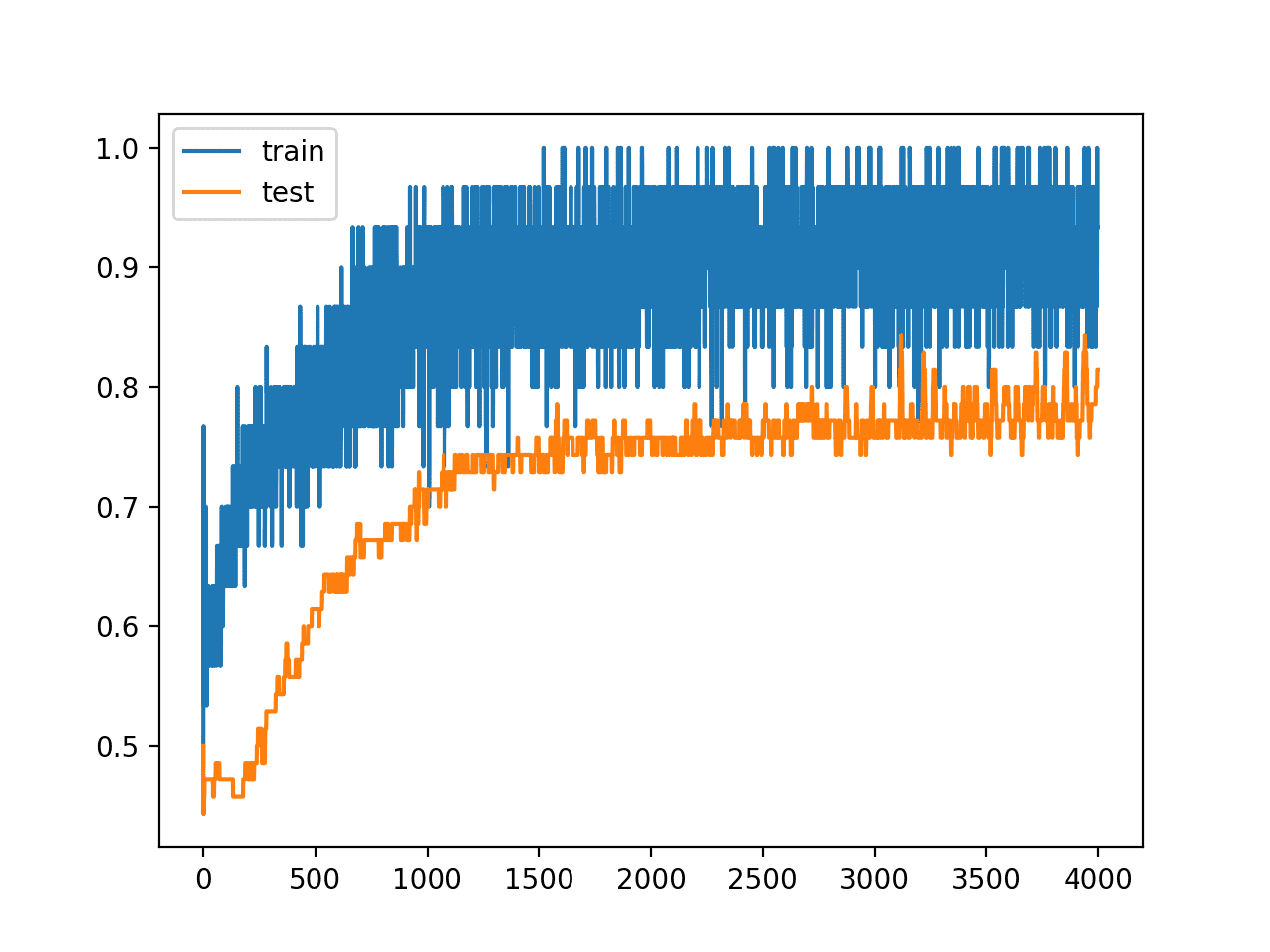

An issue with LSTMs is that they can easily overfit training data, reducing their predictive skill. Regularization prevents the model from overfitting by adding extra information to it. Regularization is any modification we make to a learning algorithm that is intended to reduce its generalization error but not its training error.

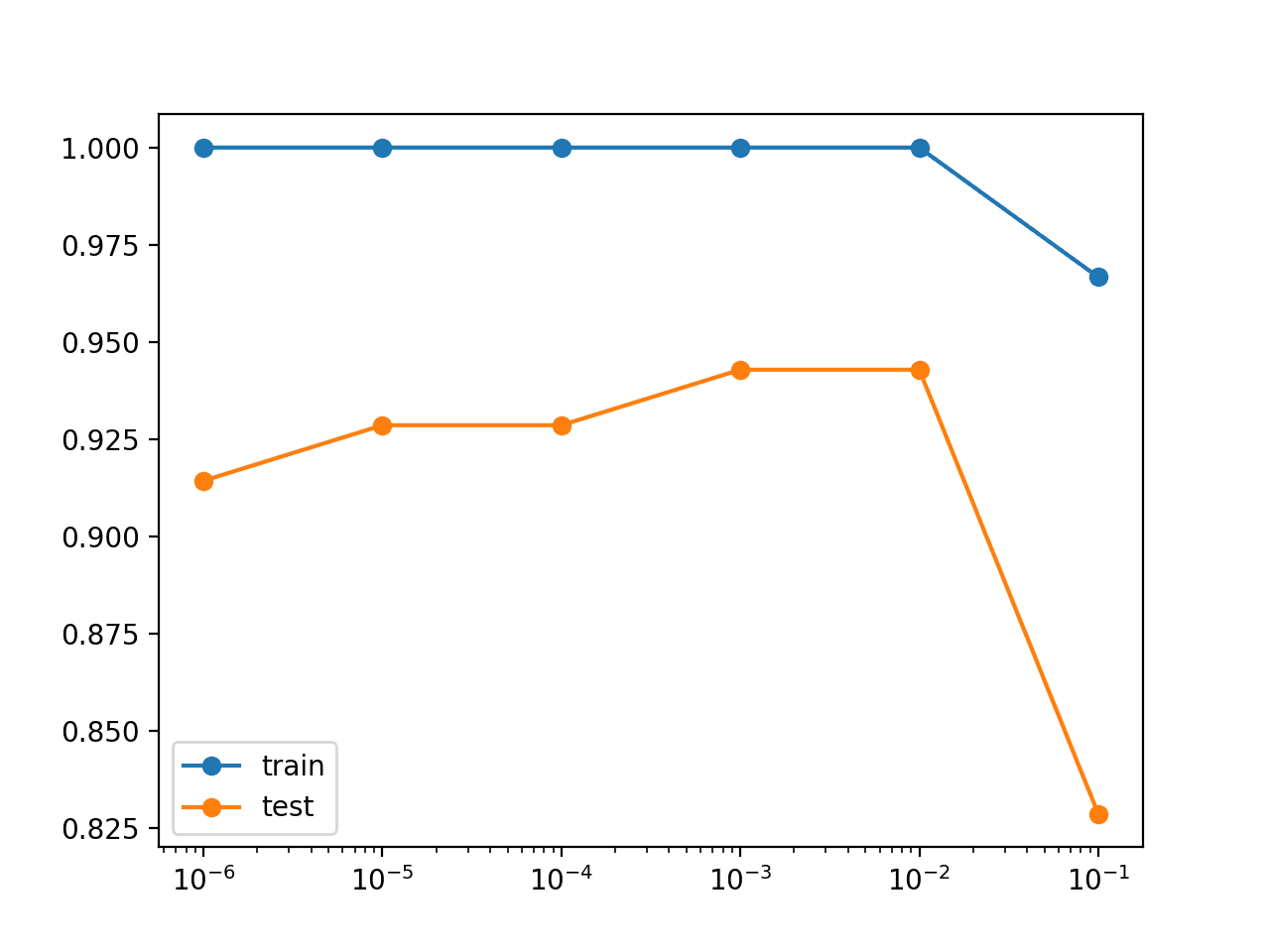

A regularizer that is invariant to rescaled weights and biases corresponds to an improper prior, one that cannot be normalized, which leads to difficulties in selecting the regularization coefficients. X1, X2, ..., Xn are the features for Y. Regularization is the most widely used technique for penalizing complex models in machine learning. It is deployed to reduce overfitting, or shrink generalization error, by keeping network weights small.

Dropout is a technique where randomly selected neurons are ignored during training. Ridge regression adds the squared magnitude of the coefficients as a penalty term to the loss function. Any machine learning expert would strive to make their models accurate and error-free.
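The squared-magnitude penalty used by Ridge regression can be sketched next to the absolute-value penalty used by Lasso. The function names and the strength parameter `lam` are hypothetical, and the coefficient list is assumed to exclude the intercept, which is a common convention rather than something the text specifies.

```python
def l2_penalty(coefs, lam):
    """Ridge-style penalty: lam times the sum of squared coefficients."""
    return lam * sum(b * b for b in coefs)


def l1_penalty(coefs, lam):
    """Lasso-style penalty: lam times the sum of absolute coefficients."""
    return lam * sum(abs(b) for b in coefs)


# Illustrative coefficients (intercept assumed to be excluded).
coefs = [0.5, -2.0, 0.0, 3.0]
l2 = l2_penalty(coefs, lam=0.1)
l1 = l1_penalty(coefs, lam=0.1)
print(l1, l2)
```

Either value is added to the ordinary loss, so larger coefficients make the total loss larger.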

What is Regularization? Regularization works by adding a penalty, or complexity term, to a complex model. Overfitting happens because the model is trying too hard to capture the noise in the training dataset.

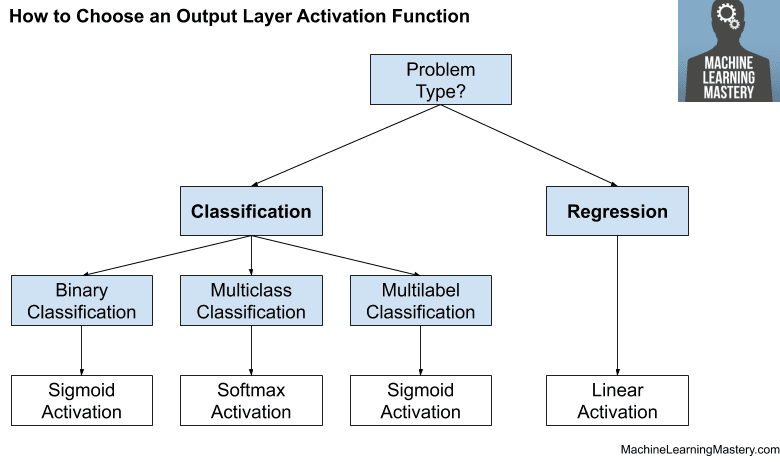

Therefore, when a dropout rate of 0.8 is suggested in a paper (retain 80%), this will in fact be a dropout rate of 0.2 (set 20% of inputs to zero). A regression model that uses the L1 regularization technique is called Lasso Regression, and a model that uses L2 is called Ridge Regression. An overfitting model will have low accuracy on unseen data.
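The conversion between the two dropout conventions described above is a single subtraction. The helper name below is hypothetical and only illustrates the arithmetic.

```python
def retain_to_dropout_rate(retain_prob):
    """Convert a paper-style retain probability to a drop rate (hypothetical helper)."""
    if not 0.0 < retain_prob <= 1.0:
        raise ValueError("retain_prob must be in (0, 1]")
    return 1.0 - retain_prob


# A paper that recommends retaining 80% of units corresponds to a drop rate of about 0.2.
rate = retain_to_dropout_rate(0.8)
print(round(rate, 2))
```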

This is exactly why we use it for applied machine learning. In the linear regression equation, Y represents the value to be predicted. Machine learning is about training a model on relevant data and using that model to make predictions on unknown data.

A dropout layer with a rate of 0.5 is written as `layer = Dropout(0.5)`. Regularization also enhances the performance of models on new inputs. It is a form of regression that shrinks the coefficient estimates towards zero.
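The shrinking of coefficient estimates towards zero can be seen in a small sketch. It assumes a single feature, no intercept, and an L2 (ridge) penalty of strength `lam`, for which the minimizer of sum((y - b*x)^2) + lam * b^2 has the closed form b = sum(x*y) / (sum(x*x) + lam). The function name and the data are illustrative only.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form ridge estimate for one feature without an intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Illustrative data generated from y = 2x, so the unpenalized slope is 2.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

# Larger penalties pull the estimated slope closer to zero.
slopes = [ridge_slope(xs, ys, lam) for lam in (0.0, 1.0, 10.0, 100.0)]
print(slopes)
```

The estimate never reaches exactly zero under an L2 penalty; it only gets smaller as `lam` grows.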

Part 2 will explain what regularization is and present some related proofs. Regularization is one of the most basic and important concepts in machine learning.

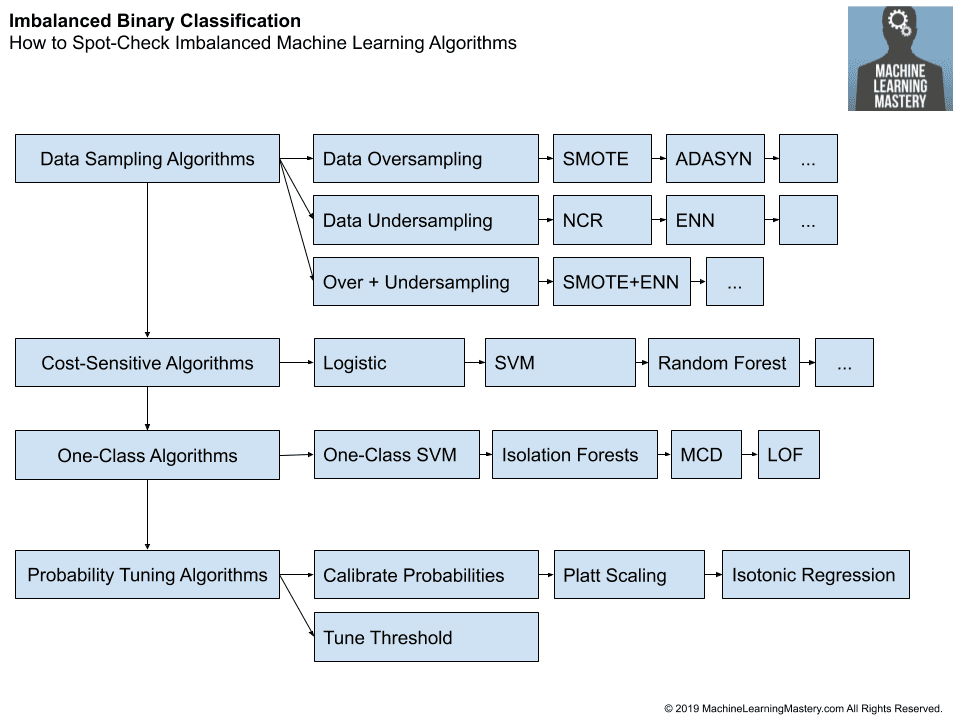

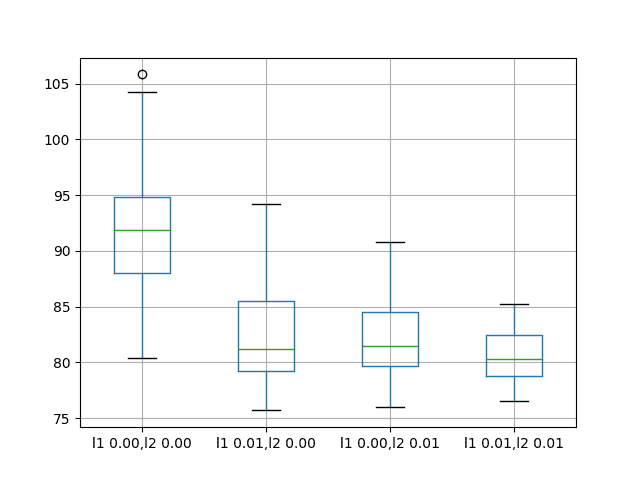

Of L1 regularization, L2 regularization, and dropout regularization, this article focuses on L1 and L2 regularization. Each regularization method is marked as strong, medium, or weak based on how effective the approach is in addressing overfitting.

Based on the approach used to overcome overfitting, we can classify regularization techniques into three categories. By "unknown" we mean data that the model has not yet seen.

Part 1 deals with the theory of why regularization came into the picture and why we need it. Dropout is a regularization technique for neural network models proposed by Srivastava et al. in their 2014 paper "Dropout: A Simple Way to Prevent Neural Networks from Overfitting".

In general, regularization means to make things regular or acceptable. Types of Regularization. Let's consider the simple linear regression equation.
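The linear regression equation referred to here is not reproduced in the text. A standard form, written with the symbols the article itself uses (Y for the predicted value, X1 ... Xn for the features, β0 ... βn for the weights), would be the following; treat it as a reconstruction rather than the author's exact notation.

```latex
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n
```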

Still, it is often not entirely clear what we mean when using the term regularization, and there exist several competing... The additional penalty term keeps the coefficients from taking extreme values, thus balancing an excessively fluctuating function. Regularization has arguably been one of the most important collections of techniques fueling the recent machine learning boom.

It is one of the most important concepts of machine learning. Regularization is a form of regression that adjusts the error function by adding another penalty term. Equivalent prior for a neural network (Srihari): a regularizer that is invariant to rescaled weights and biases can be defined, where W1 denotes the weights of the first layer and W2 the weights of the second layer.
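The equation for this regularizer did not survive in the text. One common textbook form, offered here as an assumption about what was meant, penalizes each layer's weights with its own coefficient and leaves the biases unpenalized. The symbols λ1, λ2, α1, and α2 are introduced here for illustration.

```latex
\frac{\lambda_1}{2} \sum_{w \in W_1} w^2 + \frac{\lambda_2}{2} \sum_{w \in W_2} w^2

p(\mathbf{w} \mid \alpha_1, \alpha_2) \propto
  \exp\!\left( -\frac{\alpha_1}{2} \sum_{w \in W_1} w^2
               -\frac{\alpha_2}{2} \sum_{w \in W_2} w^2 \right)
```

The second line is the prior that such a regularizer corresponds to. Because the biases are unconstrained, it cannot be normalized, which is what makes it improper.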

Regularization in Machine Learning. One of the major aspects of training your machine learning model is avoiding overfitting. Dropout is more effective than other standard, computationally inexpensive regularizers such as weight decay, filter norm constraints, and sparse activity regularization. The commonly used regularization techniques are L1 regularization, L2 regularization, and dropout regularization.

Regularization is a process of introducing additional information in order to solve an ill-posed problem or to prevent overfitting. The intuition behind regularization is explained in the previous post. Dropout works well in practice, perhaps replacing the need for weight regularization (e.g. weight decay) and activity regularization. β0, β1, ..., βn are the weights, or magnitudes, attached to the features.

The cost function for a regularized linear equation adds a penalty on the coefficients to the usual error term. Dropout Regularization For Neural Networks. To understand the concept of regularization and its link with machine learning, we first need to understand why we need regularization.
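The regularized cost function mentioned above is not written out in the text. Under the usual conventions, and assuming m training observations indexed by i and a penalty strength λ (both symbols introduced here for illustration), the Ridge (L2) and Lasso (L1) costs for the linear model can be sketched as:

```latex
J_{\text{ridge}}(\beta) = \sum_{i=1}^{m} \Big( Y_i - \beta_0 - \sum_{j=1}^{n} \beta_j X_{ij} \Big)^2
  + \lambda \sum_{j=1}^{n} \beta_j^2

J_{\text{lasso}}(\beta) = \sum_{i=1}^{m} \Big( Y_i - \beta_0 - \sum_{j=1}^{n} \beta_j X_{ij} \Big)^2
  + \lambda \sum_{j=1}^{n} \lvert \beta_j \rvert
```

The two differ only in the penalty term, and the intercept β0 is conventionally left unpenalized.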

How To Improve Deep Learning Model Robustness By Adding Noise

Weight Regularization With LSTM Networks For Time Series Forecasting

A Tour Of Machine Learning Algorithms

Multivariate Time Series Forecasting With LSTMs In Keras

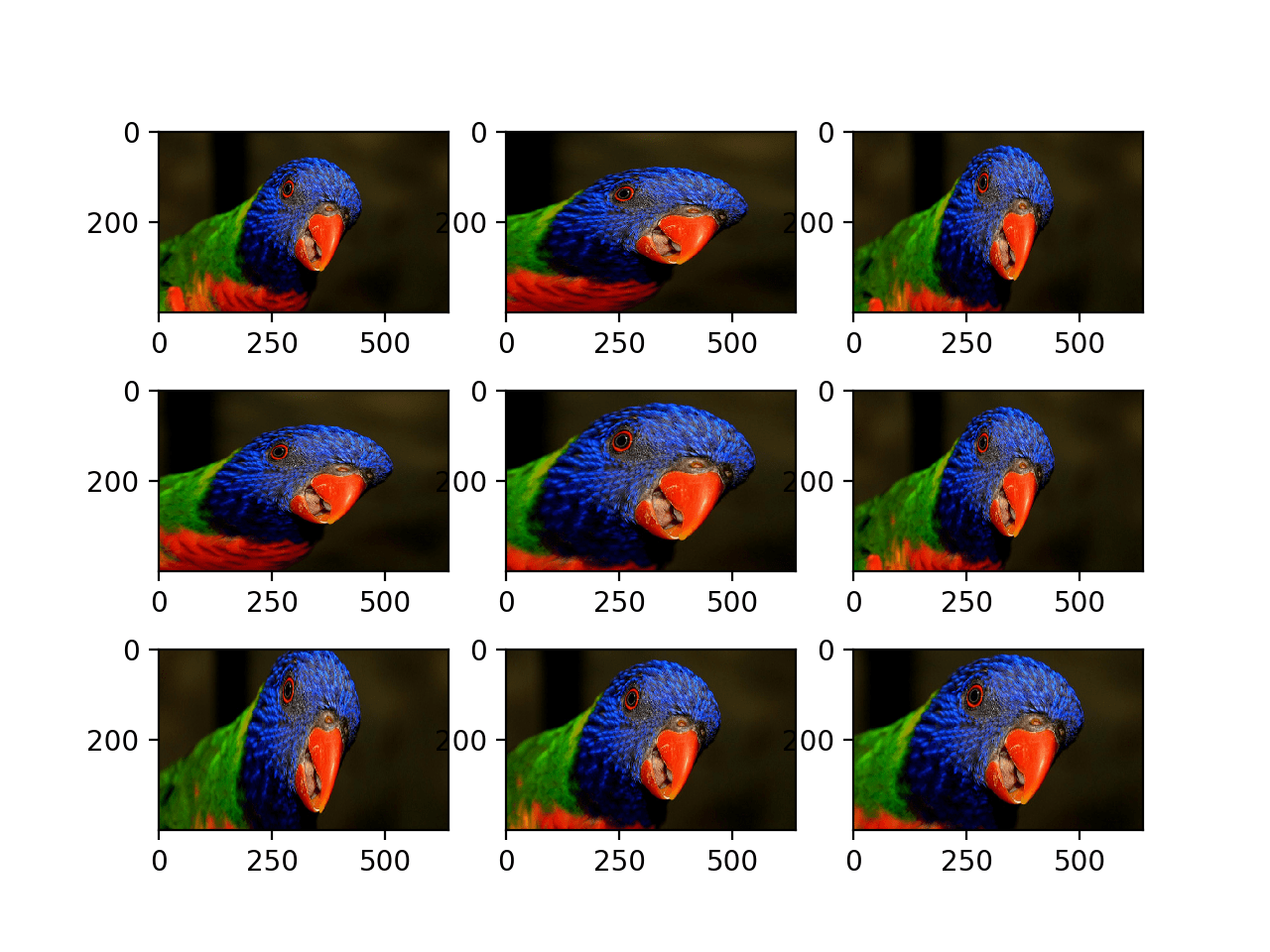

How To Configure Image Data Augmentation In Keras

A Gentle Introduction To The Rectified Linear Unit (ReLU)

How To Improve Performance With Transfer Learning For Deep Learning Neural Networks

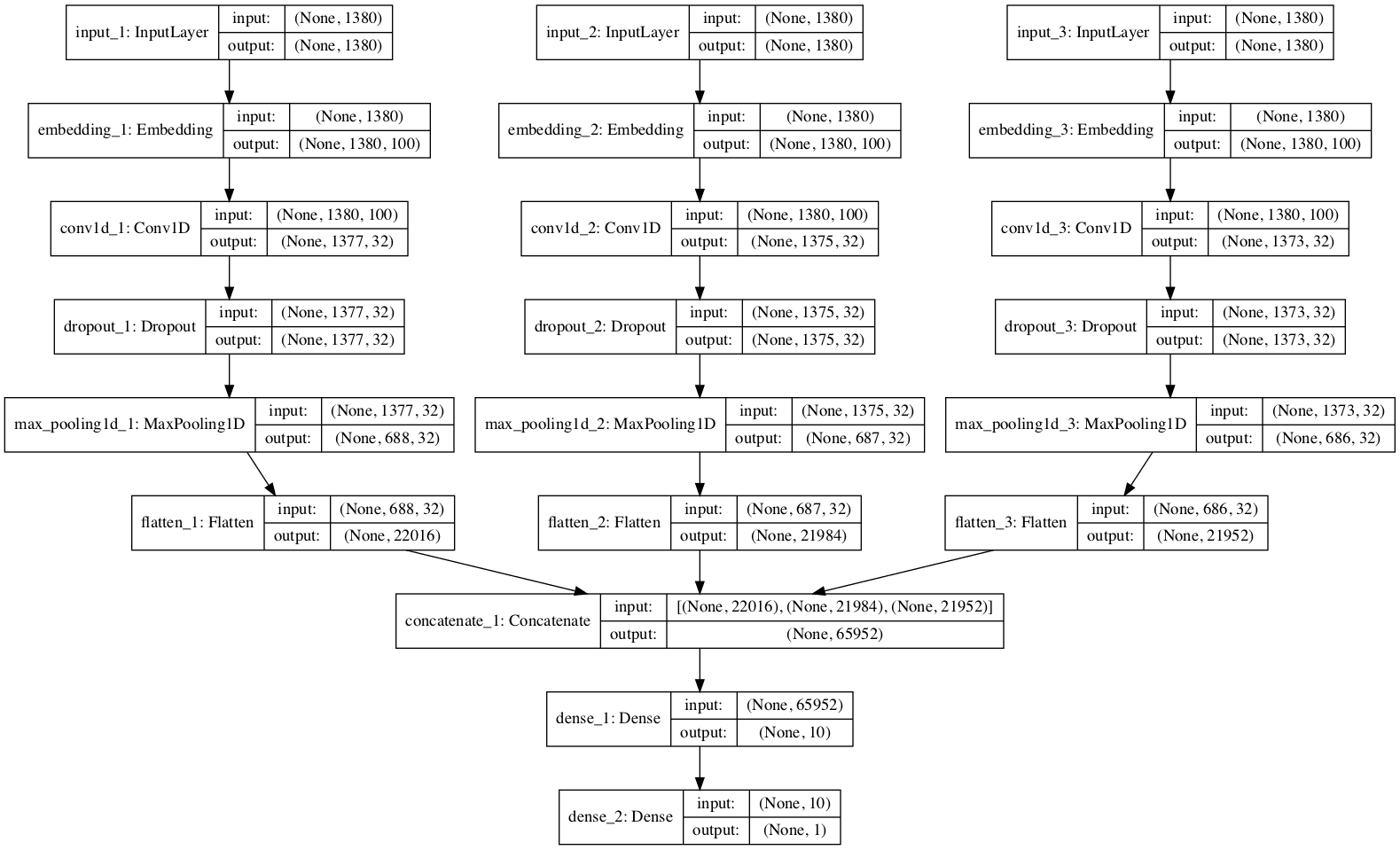

How To Develop A Multichannel CNN Model For Text Classification

Regularization In Machine Learning And Deep Learning By Amod Kolwalkar Analytics Vidhya Medium

Machine Learning Mastery Workshop Enthought Inc

A Gentle Introduction To Dropout For Regularizing Deep Neural Networks

Linear Regression For Machine Learning

Day 3 Overfitting Regularization Dropout Pretrained Models Word Embedding Deep Learning With R

Issue 4 Out Of The Box AI Ready The AI Verticalization Revue

Start Here With Machine Learning
